Paper Notes - Diffusion Policies as an Expressive Policy Class for Offline Reinforcement Learning

1 Introduction

Offline reinforcement learning (RL) faces a fundamental challenge: how to estimate the values of out-of-distribution actions, since we can’t interact with the environment directly. Several strategies aim to address this issue:

- Policy regularization: This limits how far the learned policy can deviate from the behavior policy.

- Value function constraints: The learned value function assigns low values to out-of-distribution actions.

- Model-based methods: These learn an environment model and perform pessimistic planning in the learned MDP.

- Sequence prediction: Treating offline RL as a sequence prediction problem with return guidance.

While policy regularization has shown promise, it often leads to suboptimal results. The reason? Policy regularization methods are typically unable to accurately represent the behavior policy, which in turn limits exploration and leads the agent to converge on suboptimal actions. In other words, for regularization to work, it needs to be able to faithfully capture the behavior policy.

Common regularization techniques like KL divergence and maximum mean discrepancy (MMD) often fall short in offline RL. These methods either require explicit density values or multiple action samples at each state, complicating the optimization process. This makes them less effective for offline RL settings.

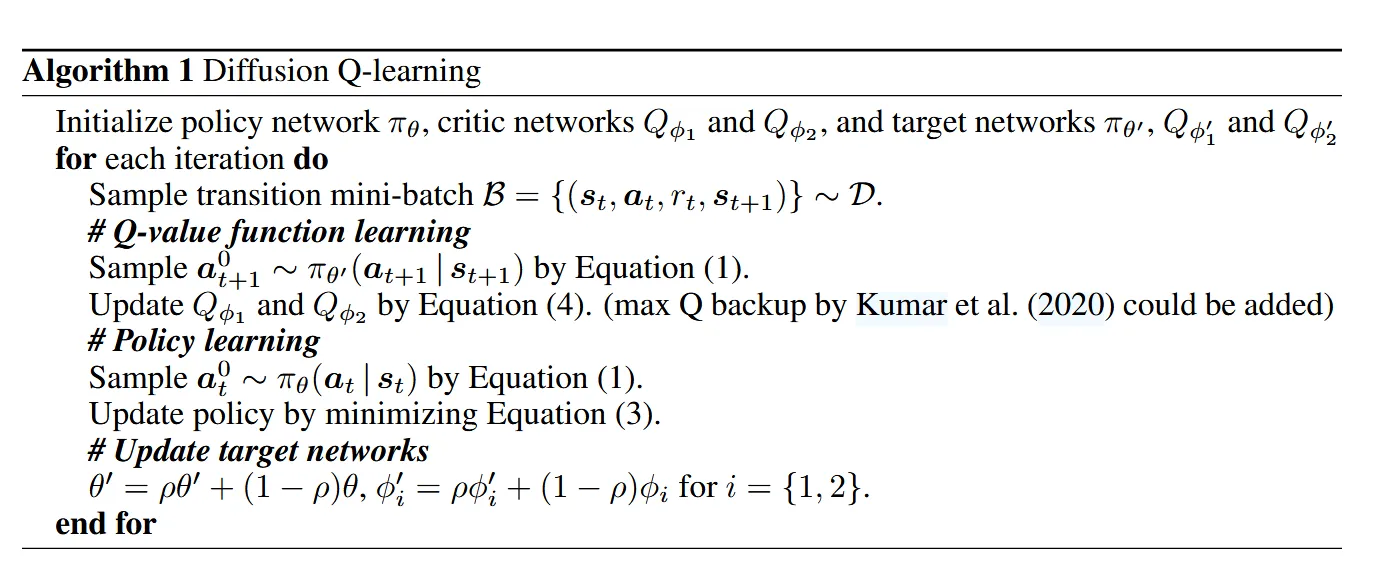

2 Diffusion Q-learning

2.1 Diffusion policy

We represent our RL policy via the reverse process of a conditional diffusion model as

where the end sample of the reverse chain, , is the action used for RL evaluation. Generally, could be modeled as a Gaussian distribution We follow Ho et al. (2020) to parameterize as a noise prediction model with the covariance matrix fıxed as and mean constructed as

We first sample and then from the reverse diffusion chain parameterized by as

Following DDPM (Ho et al., 2020), when is set as to improve the sampling quality. We mimic the simplified objective proposed by Ho et al. (2020) to train our conditional -model via

where is a uniform distribution over the discrete set as and denotes the offline dataset, collected by behavior policy

This diffusion model loss is a behavior-cloning loss, which aims to learn the behavior policy (i.e. it seeks to sample actions from the same distribution as the training data).

To work with small ,with and , we follow to define

which is a noise schedule obtained under the variance preserving SDE.

2.2 Q-learning

To improve the policy, we inject Q-value function guidance into the reverse diffusion chain in the training stage in order to learn to preferentially sample actions with high values.

The final policy-learning objective is a linear combination of policy regularization and policy improvement:

As the scale of the Q-value function varies in different offline datasets, to normalize it, we follow Fujimoto & Gu (2021) to set as , where is a hyperparameter that balances the two loss terms and the Q in the denominator is for normalization only and not differentiated over.

3 Policy Regularization

Diffusion Steps Overview

To effectively learn a distribution, the number of diffusion timesteps, denoted by , should typically be large (e.g., or for simple distributions). However, when applying Q-learning, a relatively smaller value of can still achieve satisfactory performance (or learn the optimal distribution).

It’s important to note that increasing strengthens the policy regularization. Thus, serves as a key trade-off factor between the expressiveness of the policy and the computational cost involved in training.

In these experiments, yields good results on D4RL (Fu et al., 2020) datasets. T

4 Experiments

Experimental details: We train for 1000 epochs (2000 for Gym tasks). Each epoch consists of 1000 gradient steps with batch size 256.